Sergey - stock.adobe.com

Transformer neural networks are shaking up AI

Introduced in 2017, transformers were a breakthrough in modeling language that enabled generative AI tools such as ChatGPT. Learn how they work and their uses in enterprise settings.

First introduced in 2017, the transformer neural network architecture paved the way for the recent boom in generative AI services built on large language models such as ChatGPT.

This new neural network architecture brought major improvements in efficiency and accuracy to natural language processing (NLP) tasks. Complementary to other types of algorithms such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), the transformer architecture brought new capabilities to machine learning.

Transformers initially caught on because of their impressive ability to translate nuances across languages. However, as recent generative AI developments have demonstrated, transformers can also help LLMs produce lengthy responses, translate data across formats and discern relationships across multiple documents.

The latest innovation in the area of transformers, called multimodal AI, can associate patterns and relationships across multiple modalities of data, such as text, code, images, audio or robot instructions. This enables more efficient coding, faster creative work and improved analysis. Multimodal transformers are also powering the creation of more robust transcription and text-to-speech generation tools.

But while transformers are good at associating relationships, they lack reasoning and understanding. They are notoriously opaque, which can confound efforts to identify and fix problems. Transformers are also prone to hallucinations -- something that often produces interesting results when creating art, but can be catastrophic when making important decisions.

"Transformers move us beyond AI applications that find patterns within existing data or learn from repetition into AI that can learn from context and create new information," said Josh Sullivan, co-founder and CEO at production machine learning platform Modzy. "I think we're only seeing the tip of the iceberg in terms of what these things can do."

In the short term, vendors are finding ways to weave some of the larger transformer models for NLP into various commercial applications. Researchers are also exploring how these techniques can be applied across a wide variety of problems, including time series analysis, anomaly detection, label generation and optimization. Enterprises, meanwhile, face several challenges in commercializing these techniques, including meeting the computation requirements and addressing concerns about bias.

The debut of transformer neural networks

Interest in transformers first took off in 2017, when Google researchers reported a new technique that used a concept called attention in the process of translating languages. Attention refers to a mathematical description of how parts of language -- such as words -- relate to, complement and modify each other.

Google developers highlighted this new technique in the seminal paper "Attention Is All You Need," which showed how a transformer neural network was able to translate between English and French with more accuracy and in only a quarter of the training time compared with other neural nets.

Since then, researchers have continued to develop transformer-based NLP models, including OpenAI's GPT family; Google's Bert, Palm and Lamda; Meta's Llama; and Anthropic's Claude. These models have significantly improved accuracy, performance and usability on NLP tasks such as understanding documents, performing sentiment analysis, answering questions, summarizing reports and generating new text.

The sheer amount of processing power that organizations such as Google and OpenAI have thrown at their transformer models is often highlighted as a major factor in their limited applicability to the enterprise. But now that these foundation models have been built, they could dramatically reduce the efforts of teams interested in refining these models for other applications, said Bharath Thota, partner in the analytics practice of Kearney, a global strategy and management consulting firm.

"With the pre-trained representations, training of heavily engineered task-specific architectures is not required," Thota said. Transfer learning techniques make it easier to reuse the work by companies such as Google and OpenAI.

Transformers vs. CNNs and RNNs

Transformers combine some of the benefits traditionally seen in CNNs and RNNs, two of the most common neural network architectures used in deep learning. A 2017 analysis showed that CNNs and RNNs were the predominant neural nets used by researchers -- accounting for 10.2% and 29.4%, respectively, of papers published on pattern recognition -- while the nascent transformer model was then at 0.1%.

CNNs are widely used for recognizing objects in pictures. They make it easier to process different pixels in parallel to tease apart lines, shapes and whole objects. But they struggle with ongoing streams of input, such as text.

Researchers have thus often addressed NLP tasks using RNNs, which are better at evaluating strings of text and other ongoing streams of data. RNNs work well when analyzing relationships among words that are close together, but they lose accuracy when modeling relationships among words at opposite ends of a long sentence or paragraph.

To overcome these limitations, researchers glommed on to other neural network processing techniques, such as long short-term memory networks, to increase the feedback between neurons. These new techniques improved the performance of the algorithms, but did not improve the performance of models used to translate longer text.

Researchers began exploring ways of connecting neural network processors to represent the strength of connection between words. The mathematical modeling of how strongly certain words are connected was dubbed attention.

At first, the idea was that attention would be added as an extra step to help organize a model for further processing by an RNN. However, Google researchers discovered that they could achieve better results by throwing out the RNNs altogether and instead improving how the transformers modeled the relationships among words.

"Transformers use the attention mechanism, which takes the context of a single instance of data and encodes its context ... capturing how any given word relates to other words that come before and after it," said Chris Nicholson, a venture partner at early-stage venture firm Page One Ventures.

Transformers look at many elements, such as all the words in a text sequence, at one time, while also paying closer attention to the most important elements in that sequence, explained Veera Budhi, CTO at financial advice platform Habits. "Previous approaches could do one or the other, but not both," he said.

This gives transformers two key advantages over other models such as CNNs and RNNs. First, they are more accurate because they can understand the relationships among sequential elements that are far from each other. Second, they are fast at processing sequences because they pay more attention to a sequence's most important parts.

Transformers: An evolution in language modeling

At this point, it's helpful to take a step back to consider how AI models language. Words need to be transformed into some numerical representation for processing. One approach might be to simply give every word a number based on its position in the dictionary. But that approach wouldn't capture the nuances of how the words relate to each other.

"This doesn't work for a variety of reasons, primarily because the words are represented as a single entry without looking at their context," said Ramesh Hariharan, CTO at Strand Life Sciences and adjunct professor at the Indian Institute of Science.

Researchers discovered that they could elicit a more nuanced view by capturing each word in vector format to describe how it relates to other words and concepts. For a simple example, the word king might be expressed as a two-dimensional vector representing a male that is a head of state.

In practice, researchers have found ways to describe words with hundreds of dimensions representing a word's closeness to the meanings and uses of other words. For example, depending on context, the word line can refer to the shortest distance between two points, a scheduled sequence of train stops or the boundary between two objects.

Before the Google breakthrough, words were encoded into these vectors and then processed by RNNs. But with transformers, the process of modeling the relationships among words became the main event. This enabled researchers to take on tasks for disambiguating the significance of words that might have different meanings, known as polysemes.

For example, humans typically have no trouble distinguishing what it means in each of the following two sentences:

The animal did not cross the road because it was too tired.

The animal did not cross the road because it was too wide.

But before the introduction of transformers, RNNs struggled with whether the word it referred to the animal or the road in these sentences. Attention made it easier to create a model that strengthened the relationships among certain words in the sentence.

"Attention is a mechanism that was invented to overcome the computational bottleneck associated with context," Hariharan said. For example, the word tired is more likely to describe an animal, whereas wide is more likely to describe a road.

These complex neural attention networks not only remember the nearby surrounding words, but also connect words that are much further away in the document, encoding the complex relationships among the words. Attention upgrades traditional vectors used to represent words in complex hierarchical contexts.

Pre-trained transformer models

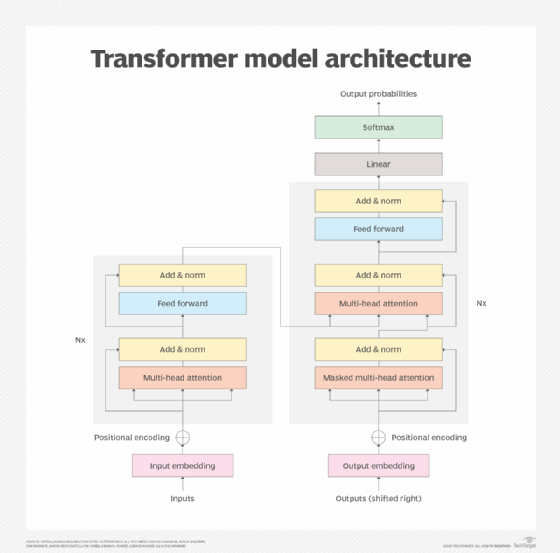

Large-scale pre-trained transformer models are provided to developers via these three fundamental building blocks:

- Tokenizers, which convert raw text to encodings that can be processed by algorithms.

- Transformers, which transform encodings into contextual embeddings, or vectors representing how the encoding relates to related concepts.

- Heads, which use contextual embeddings to make a task-specific prediction. These are the hooks programmers use to connect predictions about a word to likely next steps, such as the completion of a sentence.

Transformer applications span industries

Transformers are enabling a variety of new AI applications and expanding the performance of many existing ones.

"Not only have these networks demonstrated they can adapt to new domains and problems, they also can process more data in a shorter amount of time and with better accuracy," Sullivan said.

For example, after the introduction of transformers, a team of Google DeepMind researchers developed AlphaFold, a new transformer technique for modeling how amino acid sequences fold into the 3D shapes of proteins. AlphaFold vastly accelerated efforts to understand cell configuration and discover new drugs, leading to a renaissance in life sciences research and AI-powered drug development.

Transformers can work with virtually any kind of sequential data -- genes; molecular proteins; code; playlists; and online behaviors such as browsing history, likes or purchases. Transformers can predict what will happen next or analyze what is happening in a specific sequence. They can extract meaning from gene sequences, target ads based on online behavior or generate working code.

In addition, transformers can be used in anomaly detection, where applications range from fraud detection to system health monitoring and process manufacturing operations, said Monte Zweben, co-founder and CEO of industrial troubleshooting software vendor ControlRooms.

Transformers make it easier to tell when an anomaly arises -- without building a classification model that needs labeling -- because the transformer can detect the difference between the new representation created by the transformer and others. It can also flag an event that falls outside normal boundaries in a way that is not apparent when looking at the initial view of representing events.

Budhi expects to see many enterprises fine-tuning transformer models for NLP applications. These industry use cases include the following:

- Healthcare, such as analyzing medical records.

- Law, such as analyzing legal documents.

- Virtual assistants, such as improving understanding of user intentions.

- Marketing, such as finding out how users feel about a product using text or speech.

- Medical, such as analyzing drug interactions.

Transformers' challenges and limitations

The No. 1 challenge in using transformers is their sheer size, which means that massive processing power is required to build the largest models. Although large transformer models are offered as commercial services, enterprises will still face challenges customizing their hyperparameters for business problems to produce appropriate results, Thota said.

In addition, many of these models have various limitations for enterprise developers. Early versions could only work with 512 characters at a time, requiring developers to trim text into smaller sections or divide prompts into multiple inputs.

Over time, innovators have found various workarounds. Today's language models themselves can now often support more than 60,000 characters, and tools such as LangChain can automate the process of combining text for longer queries. In addition, vector databases provide an intermediate tier for managing large volumes of pre-processed data for even long queries.

Hariharan cautioned that teams also need to watch for bias in using these models. "The models are biased because all the training data are biased, in some sense," he said. This can have serious implications as enterprises work on establishing trustworthy AI practices.

Teams also must be alert to errors that differ from those they might have observed with other neural network architectures. "These are still early days, and as we start using transformers for larger data sets and for forecasting multiple steps, there is a higher chance of errors," said Monika Kochhar, CEO and co-founder of online gifting platform SmartGift.

For example, many models end up wasting attention on tracking connections that are never used. As AI scientists figure out how to get around these constraints, models are likely to become faster and simpler.

Scott Boettcher, director at digital consultancy Perficient, said many NLP applications built on transformers are getting much better than before. But they still cannot actually reason -- and subtle changes in context can trip them up in sometimes laughable ways, with potentially severe consequences.

Transformers and the future of generative AI

There is a lot of excitement about using transformers for new types of generative AI applications. OpenAI's transformer-based model GPT-4 has showcased a promising ability to generate text on the fly.

Transformers are already making it easier to extend techniques such as generative adversarial networks and expand into new domains. The next frontier could lie in combining multiple transformers and other techniques to minimize risk and increase reliability.

Enterprises will also need to invest in fine-tuning and testing novel failure modes and risks. At the cutting edge, autonomous AI built on collaborative and adaptive transformer models could help turbocharge efforts to build autonomous enterprises.

Transformers are perhaps the most important technology in the recent generative AI boom, but we are still in the early phases of understanding how they can break and the risks that they entail. As powerful as transformers' benefits are, enterprises must also ensure they are prioritizing trustworthy AI principles as part of their adoption.

George Lawton is a journalist based in London. Over the last 30 years he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.