adversarial machine learning

What is adversarial machine learning?

Adversarial machine learning is a technique used in machine learning (ML) to fool or misguide a model with malicious input. While adversarial machine learning can be used in a variety of applications, this technique is most commonly used to execute an attack or cause a malfunction in a machine learning system. The same instance of an attack can be changed easily to work on multiple models of different data sets or architectures.

Machine learning models are trained using large data sets pertaining to the subject under study. For example, if an automotive company wanted to teach its automated car how to identify a stop sign, it would feed thousands of pictures of stop signs through a machine learning algorithm.

An adversarial ML attack might manipulate the input data; in this case providing images that aren't stop signs but are labeled as such. The algorithm misinterprets the input data, causing the overall system to misidentify stop signs when the application using the machine learning data is deployed in practice or production.

How adversarial machine learning attacks work

Malicious actors carry out adversarial attacks on ML models. They have various motivations and a range of tactics. However, their aim is to negatively impact the model's performance, so it misclassifies data or makes faulty predictions. To do this, attackers either manipulate the system's input data or directly tamper with the model's inner workings.

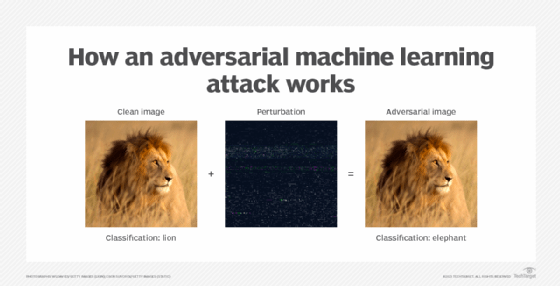

In the case of manipulated or corrupted input data, an attacker modifies an input -- such as an image or an email -- by introducing perturbances or noise. These modifications are subtle and can fool a model into wrongly concluding that data should be classified in a way that isn't correct or that isn't deemed threatening. Attackers can corrupt a model during training, or they can target a pretrained model that's already deployed.

When attackers target an unsecured model, they can access and alter its architecture and parameters so that it no longer works as it should. These attacks have gotten more sophisticated over time, so artificial intelligence (AI) experts are increasingly wary of them and advise possible countermeasures.

Types of adversarial machine learning attacks

There are three main categories of adversarial ML attacks. They are carried out differently but share the same goal of corrupting ML models for malicious purposes. They include the following:

- Evasion attack. This is the most common type of attack variant. Input data, such images, are manipulated to trick ML algorithms into misclassifying them. By introducing subtle yet deliberate noise or perturbations into the input data, attackers cause this misclassification.

- Data poisoning. These attacks occur when an attacker modifies the ML process by placing bad or poisoned data into a data set, making the outputs less accurate. The goal of a poisoning attack is to compromise the machine learning process and minimize the algorithm's usefulness.

- Model extraction or stealing. In an extraction attack, an attacker prods a target model for enough information or data to create an effective reconstruction of that model or steal data that was used to train the model. To prevent this type of attack, businesses must harden their ML systems.

There are several methods attackers can use to target a model. These methods take the following approaches:

- Minimizing perturbances. Attackers use the fewest perturbations possible when corrupting input data to make their attacks nearly imperceptible to security personnel and the ML models themselves. Attack methods that take this approach include limited-memory Broyden-Fletcher-Goldfarb-Shanno, DeepFool, Fast Gradient Sign Method and Carlini-Wagner attacks.

- Generative adversarial networks. GANs create adversarial examples intended to fool models by having one neural network -- the generator -- generate fake examples and then try to fool another neural network -- the discriminator -- into misclassifying them. While the discriminator gets better at classifying over time, a GAN also gets better and dangerously good at generating fake data.

- Model querying. This is where an attacker queries or probes a model to discover its vulnerabilities and shortcomings, then crafts an attack that exploits those weaknesses. An example of this approach is the zeroth-order optimization method. It gets its name because it lacks information about the model and must rely on queries.

Defenses against adversarial machine learning

AI and cybersecurity experts view adversarial ML as a growing threat that can exploit common vulnerabilities in ML systems. Even state-of-the-art AI systems have been fooled by malicious actors.

Currently, there isn't a definitive way to defend against adversarial ML attacks. However, there are a few techniques that ML operations teams can use to help prevent this type of attack. These techniques include adversarial training and defensive distillation.

Adversarial training

Adversarial training is a process where examples of adversarial instances are introduced to the model and labeled as threatening. This process helps prevent adversarial attacks from occurring, but it requires ongoing maintenance efforts from data science experts and developers tasked with overseeing it.

Defensive distillation

Defensive distillation makes an ML algorithm more flexible by having one model predict the outputs of another model that was trained earlier. When trained with this approach, an algorithm can identify unknown threats.

Defensive distillation is similar to GANs, which uses two neural networks together to speed up ML processes. One model is called the generator; it creates fake content resembling real content. The other is called the discriminator; it learns to identify and flag fake content with increased levels of accuracy over time.

Adversarial white box vs. black box attacks

Adversarial ML can be considered as either a white or black box attack. In a white box attack, the attacker accesses model parameters and architecture. These are also known as the inner workings of a model that are maliciously tweaked or altered to produce faulty outputs.

In a black box attack, the attacker doesn't have access to the inner workings of the model and can only know its outputs. While protecting a model's inner workings can prevent attackers from accessing them or make their attempts less effective, they don't make trained models impervious to attacks. With everything protected except outputs, attackers can still probe a black box model and extract enough sensitive data from it.

Examples of adversarial machine learning

Specific adversarial examples often won't confuse humans but will confuse ML models. While a person can read what a sign says or see what a picture depicts, computers can be fooled. Hypothetical examples of adversarial attacks include the following:

Images. An image is fed to an ML model as its input, but attackers tamper with the input data connected to the image, introducing noise. As a result, an image of a lion gets misclassified as an elephant. This type of image classification attack is also known as an evasion attack because instead of directly tampering with training data or using another blatant approach, it relies on subtle modifications to inputs that are designed to evade detection.

Emails. Spam and malware emails can appear as benign, masking malicious intent. The spam or malware tricks the ML algorithm into incorrectly classifying incoming emails as safe or not spam.

Signs. A self-driving car uses sensors and ML algorithms to detect and classify objects. However, even a minor change to a stop sign -- such as a small sticker -- can cause the vehicle's classifier to misinterpret the sign and potentially cause an accident.

History of adversarial machine learning

Various computer science researchers developed the concept of machine learning -- and its implementations like neural networks -- throughout the 20th century. For instance, British-Canadian cognitive psychologist and computer scientist Geoffrey Hinton made significant contributions on training deep neural networks in the 1980s. Adversarial attacks were theoretical back then and didn't raise real concerns. It wasn't until 2004 that researchers, such as Nilesh Dalvi, currently the CTO of Fiddler AI, discovered vulnerabilities in spam filter classifiers that could be exploited in sophisticated attacks.

Ten years later, experts said that even advanced classifiers, such as deep neural networks and support vector machines, were susceptible to adversarial attacks. Today, big tech companies like Microsoft and Google are taking preventive measures. For example, they're making their code open source so other experts can assist in detecting vulnerabilities. This helps in identifying ways to further ensure that ML models are strong enough to resist adversarial attacks.

AI and ML play a significant role in enterprises' cybersecurity strategies. Learn how enterprises should evaluate the risks and benefits of AI in cybersecurity.