6 ways to reduce different types of bias in machine learning

As adoption of machine learning grows, companies must become data experts or risk results that are inaccurate, unfair or even dangerous. Here's how to combat machine learning bias.

As companies step up the use of machine learning-enabled systems in their day-to-day operations, they've become increasingly reliant on those systems to help make critical business decisions. In some cases, the machine learning systems operate autonomously, making it especially important that the automated decision-making works as intended.

However, machine learning-based systems are only as good as the data used to train them. In modern machine learning training, developers are finding that bias is endemic and difficult to get rid of. In fact, machine learning depends on algorithmic biases to determine how to classify information and data sets. In some situations, these sorts of biases can be useful, but bias can also be harmful.

On the useful side, organizations using machine learning to optimize merchandise storage rely on biases based on merchandise size and weight. On the harmful side, biases related to race, gender or ability are present in machine learning models. When bad biases are used to feed a machine learning algorithm, systems can be viewed as untrustworthy, inaccurate and potentially harmful.

In this article, you'll learn why bias in AI systems is a cause for concern, how to identify different types of biases and six effective methods for reducing bias in machine learning.

Why is eliminating bias important?

The power of machine learning comes from its ability to learn from data and apply that learning experience to new data that a system has never seen before. However, one of the challenges data scientists have is ensuring the data fed into machine learning algorithms is not only clean, accurate and -- in the case of supervised learning -- well labeled, but it's also free of any inherently harmful biased data that could skew machine learning results.

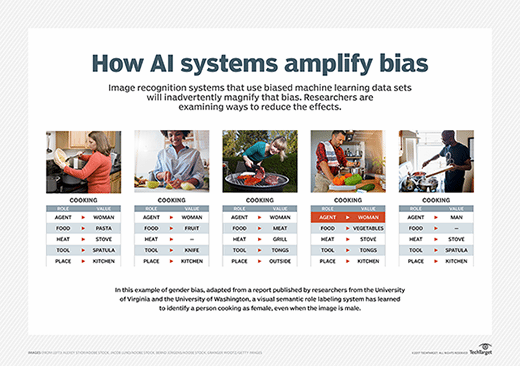

The power of supervised learning, one of the core approaches to machine learning, depends heavily on the quality of the training data. So, it should be no surprise that when biased training data is used to teach these systems, the results are biased AI systems. Biased AI systems that are implemented can cause problems, especially when used in automated decision-making systems, autonomous operations and facial recognition software to make predictions about or render judgment on individuals.

Some notable examples of the bad outcomes algorithmic bias has caused include a Google image recognition system that misidentified images of minorities in an offensive way, automated credit applications from Goldman Sachs that sparked an investigation into gender bias and a racially biased AI program used to sentence criminals.

These kinds of mistakes can hurt individuals and businesses in the following ways:

- Biased facial recognition technology can lead to false assumptions and accusations about customers and other individuals involved with a business. It can also be the source of embarrassing or mistaken marketing messages.

- Bias blunders can lead to reputational and subsequent financial harm.

- Organizations can end up over- or undersupplied with raw materials or inventory because of poor customer demand forecasts.

- Mistakes can lead to low levels of trust in machine learning and resistance to AI adoption.

- Inaccurate classifications of people can lead to unfair denials of applications for loans, credit and other benefits, which in turn can result in problems with regulatory laws and compliance rules.

Enterprises must be hypervigilant about machine learning bias: Efficiency and productivity value delivered by AI and machine learning systems will be wiped out if the algorithms discriminate against individuals and subsets of the population.

However, AI bias isn't limited to discrimination against individuals. Biased data sets can jeopardize business processes when applied to objects and data of all types. For example, take a machine learning model that was trained to recognize wedding dresses. If the model was trained using Western data, then wedding dresses would be categorized primarily by identifying shades of white. This model would fail in non-Western countries where colorful wedding dresses are more commonly accepted. Errors also abound where data sets have bias in terms of the time of day when data was collected, the condition of the data and other factors.

All of the examples described above represent some sort of bias that was introduced by humans as part of their data selection and identification methods for training the machine learning model. The systems technologists build are necessarily colored by their own experiences. As a result, they must be aware that their individual biases can jeopardize the quality of the training data. Individual bias, in turn, can easily become systemic bias as bad predictions and unfair outcomes become part of the automation process.

How to identify and measure AI bias

Part of the challenge of identifying bias is that it's difficult to see how some machine learning algorithms generalize their learning from the training data. In particular, deep learning algorithms have proven to be remarkably powerful in their capabilities. Their use in neural networks requires large quantities of data, high-performance compute power and a sophisticated approach to efficiency, resulting in machine learning models with profound abilities.

However, much of deep learning is a "black box." It's not clear how a neural network predictive model arrives at an individual decision. You can't simply query the system and determine with precision which inputs resulted in which outputs. This makes it hard to spot and eliminate potential biases when they arise in the results. Researchers are increasingly turning their focus to adding explainability to neural networks, known as explainable AI (XAI) or "white box" AI. Verification is the process of proving the properties of neural networks. However, because of the size of neural networks, it can be hard to check them for bias.

While XAI has found early use in healthcare and the military, it's still emerging. Until we have broad use of explainable systems, we must understand how to recognize and measure AI bias in black box machine learning models. Some of the biases in the data sets arise from the selection of training data sets. The model needs to represent the data as it exists in the real world. If your data set is artificially constrained to a subset of the population, you will get skewed results in the real world, even if it performs well against training data. Likewise, data scientists must take care in how they select data to include in a training data set, and which features and dimensions are included in the training data.

To combat inherent data bias, companies are implementing programs to broaden the diversity of their data sets and the diversity of their employees. More diversity among staff members means people of many perspectives and varied experiences are feeding systems the data points to learn from. Unfortunately, the tech industry is homogeneous -- there aren't many women or people of color in the field. Efforts to diversify teams should also have a positive effect on the machine learning models produced, since data science teams will be better able to understand the requirements for more representative data sets.

Different types of machine learning bias

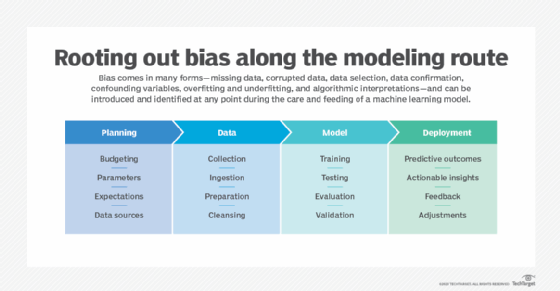

Some of the sources of machine learning model bias are represented in the data that's collected, and others in the methods used to sample, aggregate, filter and enhance that data.

- Sampling bias. One common form of bias results from mistakes made in data collection. A data sampling bias happens when data is collected in a manner that oversamples from one community and undersamples from another. This might be intentional or unintentional. The result is a model that is overrepresented by a particular characteristic and, as a result, is weighted or biased in that way. The ideal sampling should either be completely random or match the characteristics of the population to be modeled.

- Measurement bias. Measurement bias results from not accurately measuring or recording the data that has been selected. For example, if you're using salary as a measurement, there might be differences in salary related to bonuses and other incentives, or regional differences might affect the data. Other measurement bias can result from using incorrect units, normalizing data in incorrect ways and making miscalculations.

- Exclusion bias. Similar to sampling bias, exclusion bias arises from data that's inappropriately removed from the data source. When you have petabytes or more of data, it's tempting to select a small sample to use for training -- but in doing so, you might inadvertently exclude certain data, resulting in a biased data set. Exclusion bias also happens when duplicates are removed from data where the data elements are actually distinct.

- Experimenter or observer bias. Sometimes, the act of recording data itself can be biased. When recording data, the experimenter or observer might only record certain instances of data, skipping others. Perhaps a machine learning model is created based on sensor data, but sampling is done every few seconds, missing key data elements. There could also be some other systemic issue in the way the data has been observed or recorded. In some instances, the data itself might even become biased by the act of observing or recording that data, which could trigger behavioral changes.

- Prejudicial bias. One insidious form of bias has to do with human prejudices. In some cases, data might become tainted by bias based on human activities that underselected certain communities and overselected others. When using historical data to train models, especially in areas that have been rife with prejudicial bias, care must be taken to ensure new models don't incorporate that bias.

- Confirmation bias. Confirmation bias is the desire to select only information that supports or confirms something you already know, rather than data that might suggest something that runs counter to preconceived notions. The result is data that's tainted because it was selected in a biased manner or because information that doesn't confirm the preconceived notion is thrown out.

- Bandwagoning or bandwagon effect. The bandwagon effect is a form of bias that happens when a trend occurs in the data or in some community. As the trend grows, the data supporting that trend increases, and data scientists run the risk of overrepresenting the idea in the data they collect. Moreover, any significance in the data might be short-lived: The bandwagon effect could disappear as quickly as it appeared.

No doubt, there are other types of bias that might be represented in a data set than just the ones listed above. All possible forms of bias should be identified early in a machine learning project.

The history of machine learning

The history of machine learning began with attempts to map human neural processes and has led to major breakthroughs and discoveries. Read about the history of machine learning.

6 ways to reduce bias in machine learning

Developers take the following steps to reduce machine learning bias:

- Identify potential sources of bias. Using the above sources of bias as a guide, one way to address and mitigate bias is to examine the data and see how the different forms of bias could affect it. Have you selected the data without bias? Have you checked for any bias arising from errors in data capture or observation? Have you ensured you aren't using a historic data set tainted with prejudice or confirmation bias? By asking these questions, you can help to identify and potentially eliminate that bias.

- Set guidelines and rules for eliminating bias and procedures. To keep bias in check, organizations should set guidelines, rules and procedures for identifying, communicating and mitigating potential data set bias. Forward-thinking organizations are documenting cases of bias as they occur, outlining the steps taken to identify bias and the efforts made to mitigate it. By establishing these rules and communicating them in an open, transparent manner, organizations begin to address issues of machine learning model bias.

- Identify accurate representative data. Prior to collecting and aggregating data for machine learning model training, organizations should first try to understand what a representative data set would look like. Data scientists must use their data analysis skills to understand the population that's to be modeled, along with the characteristics of the data used to create the machine learning model. These two things should match to build a data set with as little bias as possible.

- Document and share how data is selected and cleansed. Many forms of bias occur when selecting data from among large data sets and during data cleansing operations. To ensure few bias-inducing mistakes are made, organizations should document their methods of data selection and cleansing. They should also let others examine the models for any form of bias. Transparency allows for root cause analysis of sources of bias to be eliminated in future model iterations.

- Screen models for bias as well as performance. Machine learning models are often evaluated prior to being placed into operation. Most of the time, these evaluations focus on accuracy and precision when judging model performance. However, organizations should also add measures of bias detection in these evaluations. Even if the model performs with an acceptable level of accuracy and precision for particular tasks, it could fail on measures of bias, which might point to issues with the training data.

- Monitor and review models in operation. Finally, there's a difference between how the machine learning model performs in training and how it performs in the real world. Organizations should provide methods to monitor and continuously review the model's operational performance. If there are signs of bias, then the organization can take action before it causes irreparable harm.

How to improve fairness

In machine learning and AI, fairness refers to models that are free from algorithmic biases in their design and training. A fair machine learning model is one that's trained to make unbiased decisions.

Before identifying how to improve fairness, it's important to understand the different kinds of fairness used in machine learning. While different fairness types can be used in tandem with others, they can differ depending on which aspect of the machine learning model they target, such as the algorithm or the underlying data itself.

Different kinds of fairness

Some frequently used fairness classifications include the following:

- Predictive fairness. Also known as predictive parity, this type of fairness focuses on machine learning algorithms. It ensures similar predictions across all groups or classifications of people or whatever entity is being modeled. This method ensures a model's predictions have no systematic differences based on identifiers such as race, age, gender or disability.

- Social fairness. Social fairness, or demographic parity, requires the model to make decisions unrelated to attributes of gender, race or age. This ensures each group has the same probability of true positive rates -- or the rate of correct predictions -- based on its proportional representation in a demographic.

- Equal opportunity. This is an algorithmic type of fairness that ensures each group has essentially the same true positive rate generated by the model.

- Calibration. Calibration is a fairness constraint that ensures an equal number of false positive and negative predictions is generated for each group in a model.

With these classifications in mind, the fairness of machine learning models can be improved in several ways. Many techniques aimed at improving fairness come into play during the model's training, or processing, stage. It's important that businesses use models that are as transparent as possible to understand exactly how fair their algorithms are.

Techniques to improve fairness

Some of the most effective techniques used to improve fairness in machine learning include the following:

- Feature blinding. Feature blinding involves removing attributes as inputs in models -- in other words, blinding the model to specific features or protected attributes such as race and gender. However, this method isn't always sufficient, as other attributes can remain that correlate with different genders or races, enabling the model to develop a bias. For instance, certain genders might correlate with specific types of cars.

- Monotonic selective risk. Recently developed by MIT, this method is based on the machine learning technique of selective regression. Selective regression allows a machine learning model to decide whether it makes a prediction based on the model's own confidence level. This model is known for underserving poorly represented subgroups that might not have enough data for a model to feel confident making a prediction. To correct for this inborn bias, MIT developed monotonic selective risk, which requires the mean squared error for every group to decrease evenly, or monotonically, as the model improves. As a result, the more the error rate increases in accuracy, the more the performance of every subgroup improves.

- Objective function modification. Every machine learning model is optimized for an objective function, such as accuracy. Objective function modification focuses on altering or adding to an objective function to optimize for different metrics. In addition to accuracy, a model can be optimized for demographic parity or equality of predictive odds.

- Adversarial classification. Adversarial classification involves optimizing a model not just for accurate predictions, but also inaccurate predictions. Though it might sound counterintuitive, poor predictions point out weak spots in a model, and then the model can be optimized to prevent those weaknesses.

The role of algorithms in bias

Algorithmic biases can spell disaster for machine learning models and AI technology. Go more in-depth on AI algorithms and how to combat biases within them.

Will we ever be able to stamp out bias in machine learning?

Unfortunately, bias is likely to remain a part of modern machine learning. Machine learning is biased by definition: Its predictive and decision-making capabilities require prioritizing specific attributes over others. For instance, a good model must bias the prevalence of breast cancer toward a female population or prostate cancer toward a male population. This type of acceptable bias is important to the proper functioning of machine learning.

But here's where it's vital to understand the difference between acceptable bias and harmful bias. The previous examples are types of acceptable, practical biases that aid the accuracy of machine learning. Harmful bias, on the other hand, can harm the individuals it's applied to and do reputational harm to the companies employing it. It can lead to dead-wrong machine learning models.

Several prominent studies including one from MIT Media Lab have shown that AI-based facial recognition technologies don't recognize women and people of color as well as they do white men. This has led to false arrests, harm to wrongly detained individuals and failure to detain guilty parties. It's imperative that machine learning researchers and developers stamp out this kind of bias.

This article has shown several ways harmful bias can be reduced and fairness increased in machine learning models. However, it remains an open question whether harmful bias can be completely removed from machine learning. As it currently stands, even unsupervised machine learning relies on human interaction in some form -- a dynamic that opens models up to human error.

A future of completely unbiased machine learning might be possible if artificial superintelligence (ASI) turns out to be science rather than science fiction. It's predicted that if ASI were to become reality, it would transcend the abilities and intelligence of humans and human biases, and be able to design perfect predictive models free of any harmful biases.